Call for challenges

Challenges have become an essential part of research in medical imaging. Challenges pose a problem and solicit solutions from participants all over the world; each solution is validated on the same test data, making for a fair comparison. Following MIDL's commitment to openness and transparency, hosting regular, high-quality challenges is a logical next step towards creating an active scientific community in the "midl" of methodological novelty and clinical impact.

MIDL is now soliciting proposals for medical image analysis challenges. We want to support several challenges every year. We will integrate these events with MIDL activities such as our online events and the yearly MIDL conference.

We aim to improve the quality of challenges and to encourage the adoption of new elements that make them more reproducible and that make their output (the solutions that solve the task at hand efficiently and effectively) more reusable, both for the research community and for clinical end-users.

We have decided to partner with grand-challenge.org because this platform already offers many of the features that would make future challenges more reproducible, open and reusable. The platform grand-challenge.org and the MIDL challenges are supported by Amazon Web Services.

How to submit?

Requests for organizing MIDL challenges can be submitted at any time and will be processed on a rolling basis. To submit your application, follow this link. Make sure to indicate that the Affiliated event is MIDL, and make sure to include the Structured challenge submission form on the Structured Challenge Submission System site.

What do you get from us?

If your challenge is selected as a MIDL challenge:

- You will be able to host the challenge on grand-challenge.org, and the basic cost of 5000€ is waived. Please be aware that you still need to pay storage and compute costs.

- MIDL and grand-challenge.org will advise the organizing team and provide support for data collection and data annotation. The organizing team will have a point of contact from the MIDL Board.

- We will provide participants free access to large training data sets via the AWS Open Data Registry, and we will use Zenodo for making smaller data sets available.

- The best solutions to challenges will remain available as Algorithms on grand-challenge.org, where any registered user of the platform can run them on new data for research and development.

- Challenge organizers can provide free participation in the yearly MIDL conference for team members of top contenders.

- We may be able to provide prizes to the best-performing team in the form of AWS credits.

- There will be a new Challenge Paper Track at the MIDL conference for papers relating to MIDL challenges. We expect this will attract more participants to your challenge. The deadlines and review process for the Challenge Paper Track will be the same as for Short Papers, and the track will have a high acceptance rate.

- Organizers are invited to present their challenge and its results at the MIDL conference.

- MIDL and grand-challenge.org will actively promote the MIDL challenges via their websites, mailing lists, blogs, and social media channels.

What do we expect and would like to see?

MIDL challenges should:

- Address a relevant application or methodological problem around medical imaging with deep learning;

- Have a diverse organizing team, preferably from multiple centers and countries;

- Actively involve the research community, for example through a good forum, videos, and tutorials (the focus of the challenge should be on a community effort to solve a problem and collectively learn from the experience, rather than on a competition resulting in proprietary solutions);

- Have a good-quality training data set, preferably multi-center and preferably large. This set must have a permissive open license such as CC-BY. (Test data should also be of high quality but can be closed. We prefer that the test data also eventually become available after the challenge is closed, but this is not a hard requirement; we realize that some data is ideal for testing but cannot be shared for legal reasons, e.g., data from large clinical trials.);

- Require their participants to provide inference code for their solutions (a so-called type 2 challenge). This inference code, including model weights, should have a permissive open-source license, be made available on GitHub, and contain a Dockerfile compatible with Algorithm submission on grand-challenge.org;

- Provide a tutorial-style baseline solution for participants to build upon;

- Run and be supported by the organizing team for at least five years (or be taken over by a new edition of the challenge);

- Include the organization of at least two MIDL webinars: one at the kick-off and one at the end, where top contenders also present (a third, mid-term event is optional);

- Require participants to submit an open access short paper that can be part of the MIDL proceedings (we will investigate whether we can use OpenReview for this);

- Preferably publish an open access Data or Protocol paper describing the challenge, the task, the data, how the reference was determined, how the metrics were selected, etc.;

- Have organizers write an open access overview paper, initially submitted as a preprint. This paper could be published in the MIDL proceedings, but other venues are also allowed; top teams must be allowed to co-author; and the paper should be submitted shortly after the challenge ends.

The process

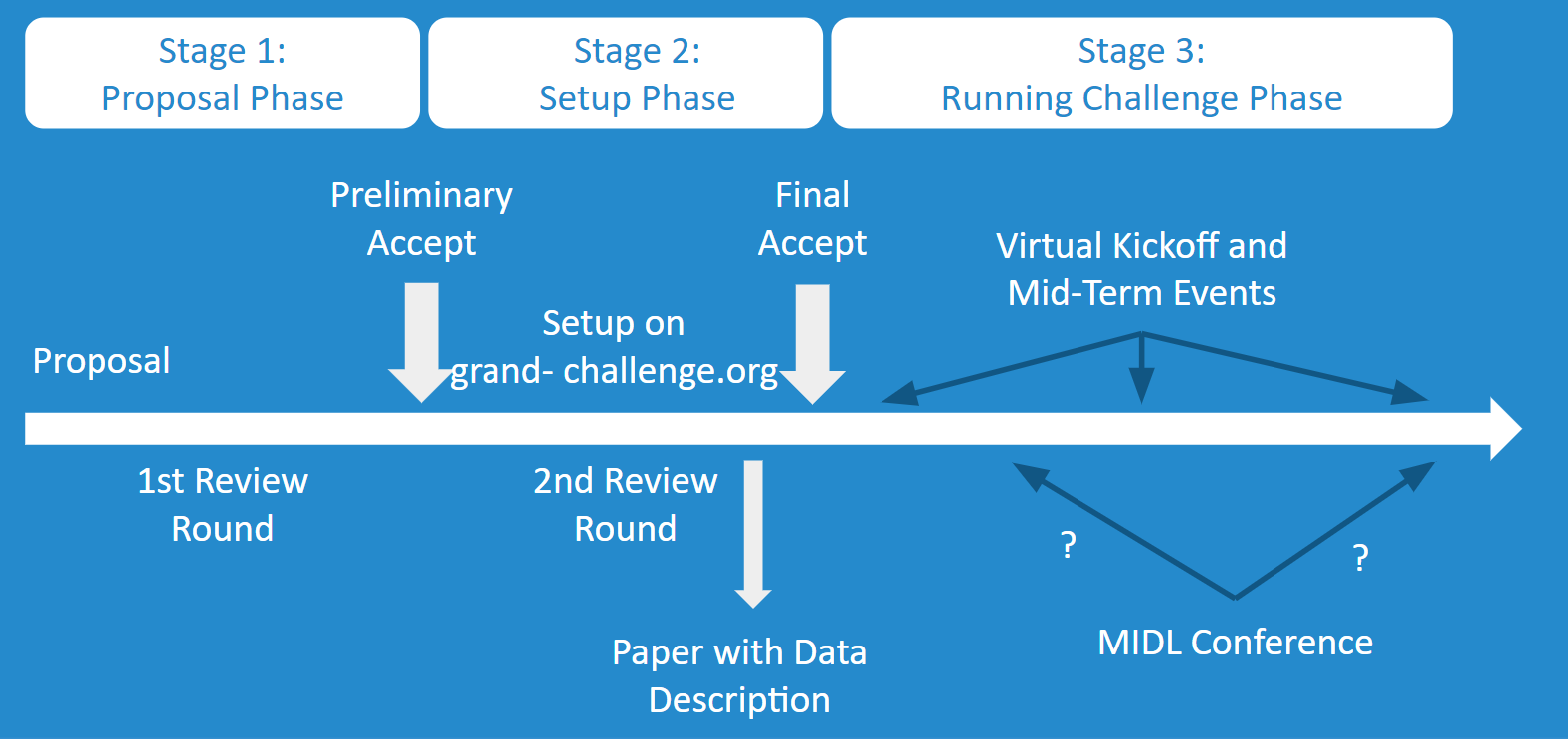

Proposals for MIDL challenges can be submitted at any time. The MIDL Challenge Board will review each proposal within one month of submission. Part of this process is communication between the board and the organizing team to clarify issues and co-create several aspects of the challenge. At the end of this phase, a submission is either rejected or tentatively accepted. In the latter case, the organizing team should prepare the challenge: complete the website, collect and annotate the data, and prepare the evaluation protocols and the baseline solution. The team may already announce the challenge publicly at this stage. When the challenge is complete and ready to launch, a second round of review will take place. The timeline for the setup phase is flexible, because we do not want to create incentives to lower a challenge's quality just to meet strict deadlines. When the challenge meets all requirements, it will be accepted, announced, and opened for participation.

We expect MIDL challenges to run online for about four to eight months. After that, the overview paper will be prepared, prizes will be awarded, and the challenge will go into a maintenance phase in which teams can still submit results. This phase lasts at least five years, or as long as teams regularly submit results; some challenges run for much longer. When the challenge no longer attracts participation or is superseded by a new challenge, it will be closed. We aim to accept about five challenges per year so that at any time there are about three MIDL challenges running and two or three upcoming.